Manage Obsidian docs using MCP server and Codex CLI

This is the second part of a series on integrating AI tools with local documentation. Check out the first part: CLI with Retrieval Augmented Generation + Obsidian docs

What is MCP: a brief overview

Model Context Protocol (MCP) is an open standard developed by Anthropic that enables AI assistants to securely connect to and interact with external data sources and tools in real-time. MCP acts as a bridge between AI models and various external systems, allowing the AI to:

- Access live data from databases, APIs, and services

- Use tools and execute functions

- Interact with local files and applications

- Connect to business systems securely

key components

MCP Server

~ Lightweight programs that expose specific capabilities

~ Can provide access to databases, file systems, APIs, or tools

~ Run locally or remotely

~ Examples: database connectors, file system access, web scrapers

MCP Client

~ Applications that connect to MCP servers

~ Examples: Claude Desktop, IDEs, LM Studio, custom applications

Data layer

~ Built on JSON-RPC 2.0, a lightweight remote procedure call protocol.

~ Exposes server features including tools, resources, and prompts.

~ Defines the message exchange format, including requests, responses, and notifications.

~ Manages the lifecycle of connections (initialization, capability negotiation, termination).
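To make the data layer more concrete, here is a minimal Python sketch of the three JSON-RPC 2.0 message kinds during connection setup. The method names (initialize, notifications/initialized, tools/list) come from the MCP specification; the client name, version, and protocol version string are placeholder assumptions, and the helper functions are hypothetical, not part of any SDK.

```python
import json
from itertools import count

_ids = count(1)  # request ids let the client match responses to requests


def request(method, params=None):
    """Build a JSON-RPC 2.0 request (has an id, so a response is expected)."""
    msg = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        msg["params"] = params
    return msg


def notification(method, params=None):
    """Build a JSON-RPC 2.0 notification (no id, so no response is expected)."""
    msg = {"jsonrpc": "2.0", "method": method}
    if params is not None:
        msg["params"] = params
    return msg


def response(request_msg, result):
    """Build a successful response that echoes the id of the request."""
    return {"jsonrpc": "2.0", "id": request_msg["id"], "result": result}


# 1. Initialization and capability negotiation (values are placeholders).
init = request("initialize", {
    "protocolVersion": "2025-06-18",
    "capabilities": {},
    "clientInfo": {"name": "example-client", "version": "0.1.0"},
})
# 2. The client signals that it is ready.
ready = notification("notifications/initialized")
# 3. The client asks which tools the server exposes.
list_tools = request("tools/list")

for message in (init, ready, list_tools):
    print(json.dumps(message))
```

A real client such as Codex handles all of this for you; the sketch only shows what travels over the wire.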

Transport layer

stdio - used mainly for local client/server interactions and prototyping (standard input/output streams)

streamable HTTP - transmits data over HTTP as a continuous stream rather than as a single complete message, so the recipient can start processing data as it arrives. This makes it possible to observe token generation in near real time.

Server-Sent Events (SSE) - works together with HTTP (SSE+HTTP). It allows a server to push real-time updates to a web client over a single, long-lived HTTP connection without the client repeatedly requesting new data. As of today, this transport type has been replaced by Streamable HTTP and should be considered a legacy method.
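For the stdio transport, the MCP specification has each JSON-RPC message travel as one line of JSON terminated by a newline. The sketch below imitates that framing in Python with an in-memory stream standing in for the server's stdin/stdout pipe; the function names are my own and purely illustrative.

```python
import io
import json


def write_message(stream, message):
    """Write one message as a single line; json.dumps escapes embedded newlines."""
    stream.write(json.dumps(message) + "\n")
    stream.flush()


def read_messages(stream):
    """Yield one decoded message per non-empty line."""
    for line in stream:
        line = line.strip()
        if line:
            yield json.loads(line)


# An in-memory buffer stands in for the pipe between client and server.
pipe = io.StringIO()
write_message(pipe, {"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
write_message(pipe, {"jsonrpc": "2.0", "method": "notifications/initialized"})
write_message(pipe, {"jsonrpc": "2.0", "id": 2, "result": {"text": "line one\nline two"}})

pipe.seek(0)
received = list(read_messages(pipe))
print(len(received), "messages received")
```

This is also why the Docker-based servers later in this post are started with the -i flag: the client needs the container's stdin to stay open.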

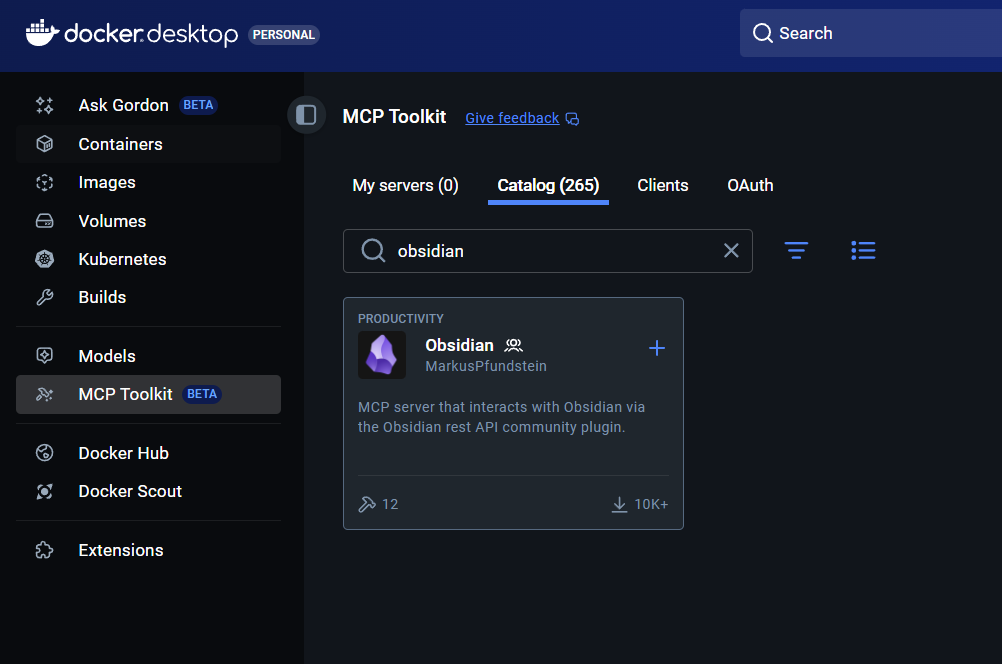

Docker Desktop as MCP server

The MCP Toolkit in Docker Desktop is still in beta, but many MCP servers are already available, mostly developed by community users.

prerequisites

~ Docker Desktop installed

~ installed Obsidian MCP server from MCP toolkit

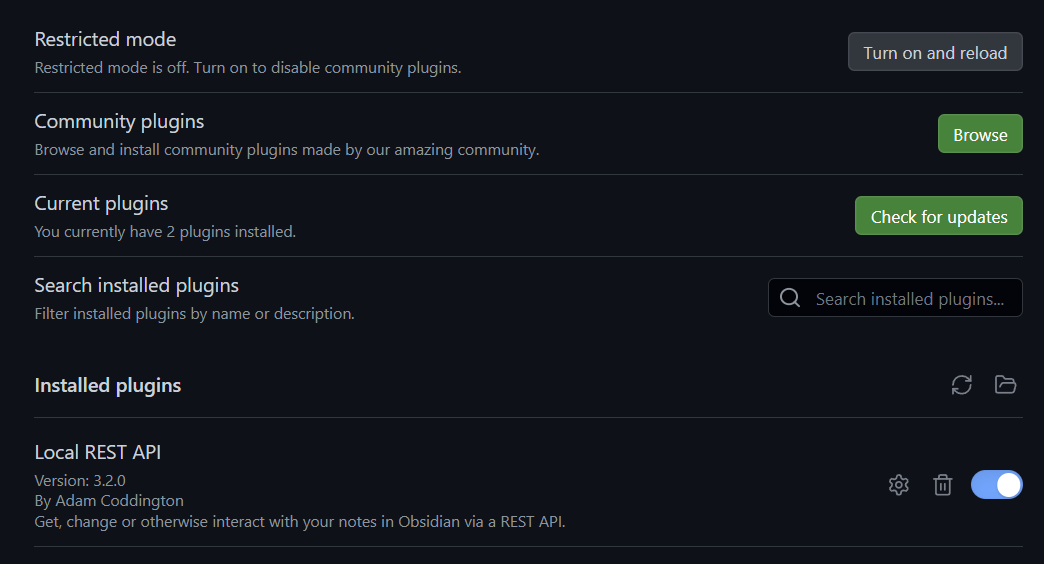

~ installed Obsidian community plugin (Local REST API)

~ API key created in the Local REST API Obsidian plugin

~ WSL2 configured in Windows environment
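Before wiring anything into an MCP client, it can help to confirm that the Local REST API plugin is reachable with your API key. The sketch below is a rough Python check under a few assumptions: the plugin listens on HTTPS port 27124 with a self-signed certificate (its default, as far as I know), it accepts the key as a Bearer token, and the host and key are read from the same OBSIDIAN_HOST and OBSIDIAN_API_KEY variables used later in this post. Adjust these if your setup differs.

```python
import os
import ssl
import urllib.request


def build_status_request(host, api_key, port=27124):
    """Build a GET request for the plugin's root endpoint with Bearer auth."""
    url = f"https://{host}:{port}/"
    return urllib.request.Request(url, headers={"Authorization": f"Bearer {api_key}"})


def check_obsidian(host, api_key):
    """Return the HTTP status code of the plugin's root endpoint."""
    # Assumption: the plugin uses a self-signed certificate, so verification
    # is disabled here. Only do this for a quick check on a trusted local network.
    context = ssl.create_default_context()
    context.check_hostname = False
    context.verify_mode = ssl.CERT_NONE
    req = build_status_request(host, api_key)
    with urllib.request.urlopen(req, context=context, timeout=5) as resp:
        return resp.status


# The network call only runs when both variables are set.
host = os.environ.get("OBSIDIAN_HOST")
api_key = os.environ.get("OBSIDIAN_API_KEY")
if host and api_key:
    print("Obsidian REST API status:", check_obsidian(host, api_key))
```

Keep in mind that under WSL2, and from inside a Docker container, Obsidian runs on the Windows host, so localhost may not be the right value for the host.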

MCP Client

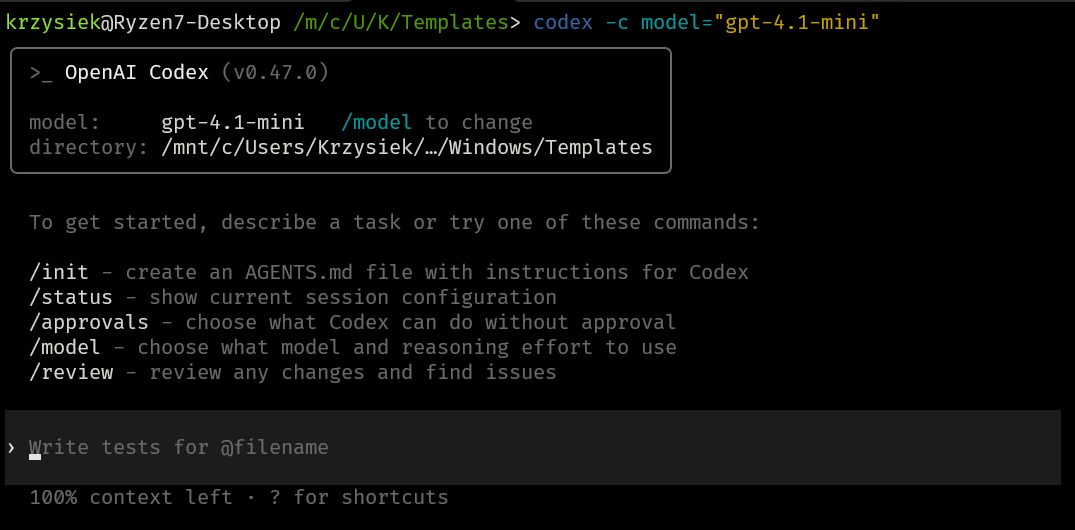

Codex CLI is a coding agent that can read, write, modify, and run local code, and it can also leverage MCP servers, which makes it a very capable AI-driven command-line interface.

installing Codex

npm install -g @openai/codex

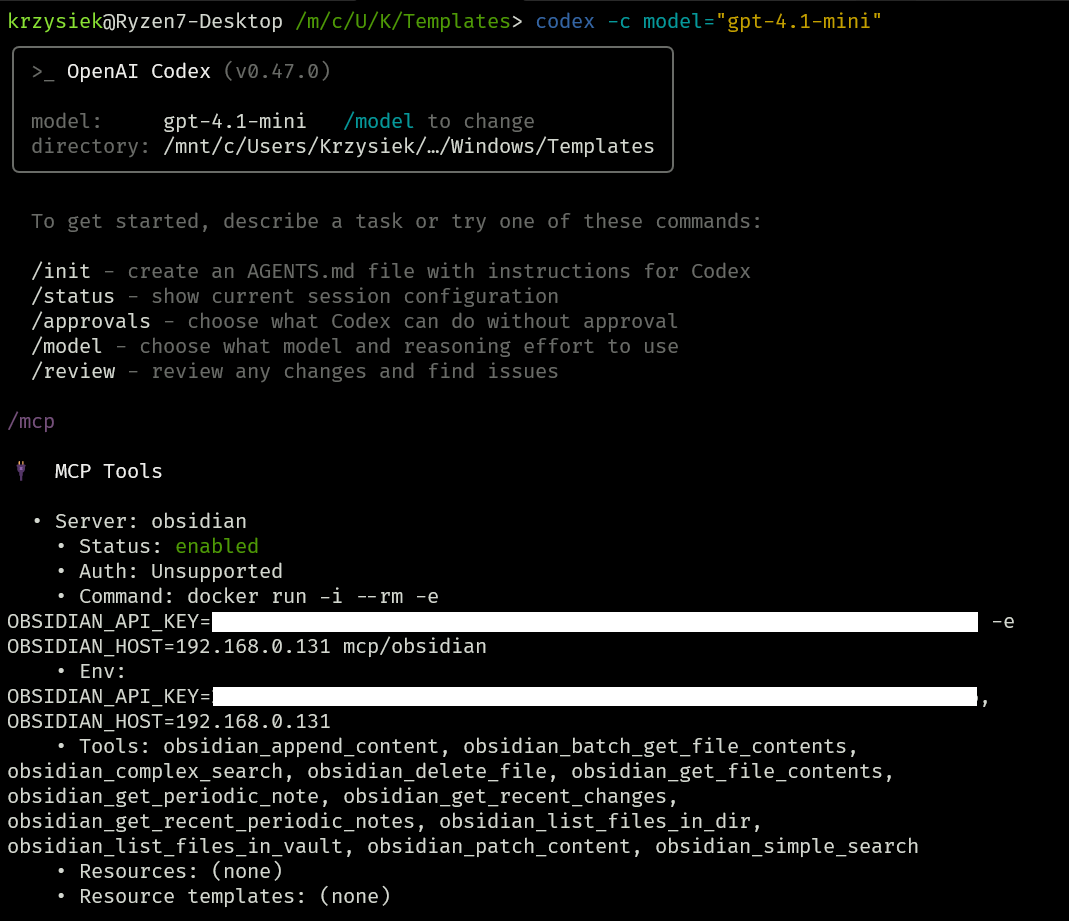

Codex launch screen under WSL2

Now, the very important part is configuring the MCP server for the Codex client.

The setup differs slightly from, for instance, Visual Studio Code: VS Code uses mcp.json, whereas Codex uses a TOML file at ~/.codex/config.toml. It looks something like this:

# a project is a trusted workspace where Codex can perform various operations, such as:

# reading, writing, and executing files, creating folder structures, and so on.

[projects."/mnt/c/Users/Krzysiek/AppData/Roaming/Microsoft/Windows/Templates"]

trust_level = "trusted"

[projects."/mnt/c/Users/Krzysiek"]

trust_level = "trusted"

[projects."/home/krzysiek"]

trust_level = "trusted"

### MCP server configuration goes here:

[mcp_servers.obsidian]

command = "docker"

args = ["run", "-i", "--rm", "-e", "OBSIDIAN_API_KEY=YOUR_API_KEY", "-e", "OBSIDIAN_HOST=IP_ADDR OR HOSTNAME", "mcp/obsidian"]

### optional configuration via Env vars:

env = { "OBSIDIAN_API_KEY" = "YOUR_API_KEY", "OBSIDIAN_HOST" = "IP_ADDR OR HOSTNAME" }

[mcp_servers.fetch]

command = "docker"

args = ["run", "-i", "--rm", "mcp/fetch"]

If everything is properly configured, Codex should list all configured MCP servers under the /mcp slash command.

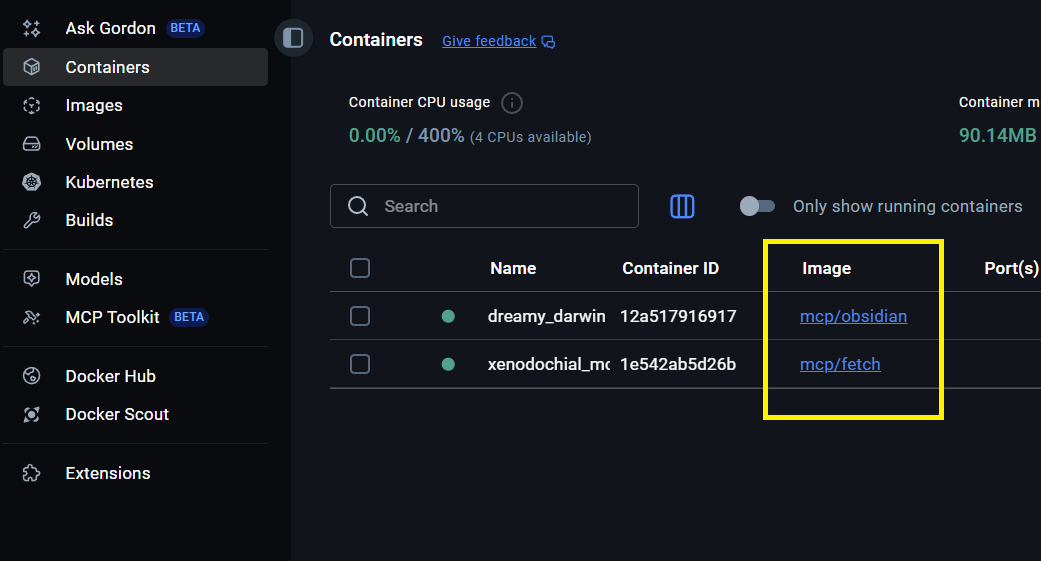

Docker MCP toolkit

While running Codex CLI, under the hood Docker spins up all MCP servers configured in ~/.codex/config.toml. Containers will be stopped and removed once you exit the CLI.

This is a neat way to save local resources when the CLI is not running and you don’t need access to the underlying MCP servers. Please note that we have two MCP servers configured:

~ Obsidian (local REST API)

~ fetch (HTTP client for reading websites + conversion from HTML to markdown for efficient LLM input)

showing docs structure

Showing the structure looks similar to the RAG approach, but it works completely differently under the hood: MCP uses the Obsidian REST API as a tool that is called when necessary.

reading content from internet and saving as MD

Next, I tested both tools (fetch and obsidian) in a single request:

I wanted to read and summarize a blog post and then store it in my Obsidian vault. I chose an article from the Tailscale blog which was posted on October 24, 2025: https://tailscale.com/blog/nat-traversal-improvements-pt3-looking-ahead

It’s a fresh blog post, so LLMs don’t have that knowledge; it has to be included in the context window using the fetch tool. (By the way, Tailscale is a great product, I highly recommend it for anyone interested in VPN, security, and privacy. It’s not sponsored; I just like their product 😊)
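Conceptually, a request like this turns into two tool calls: one to the fetch server and one to the Obsidian server. The Python sketch below shows roughly what those tools/call messages could look like. The tools/call method and its name/arguments shape come from the MCP specification, but the tool names and argument keys (fetch with url, obsidian_append_content with filepath and content) are my assumptions about these particular servers, so verify them against the tool list your client reports. The summary text is a placeholder, since the model writes the real one.

```python
import json


def tool_call(request_id, tool_name, arguments):
    """Build a JSON-RPC 2.0 tools/call request for an MCP server."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


ARTICLE_URL = "https://tailscale.com/blog/nat-traversal-improvements-pt3-looking-ahead"

# Step 1: ask the fetch server for the article (tool name is an assumption).
fetch_request = tool_call(1, "fetch", {"url": ARTICLE_URL})

# Step 2: the model summarizes the fetched text; this is only a placeholder.
summary_markdown = "# NAT traversal improvements\n\n(summary written by the model)\n"

# Step 3: ask the Obsidian server to store the note (tool name and
# argument keys are assumptions).
save_request = tool_call(2, "obsidian_append_content", {
    "filepath": "nat-traversals.md",
    "content": summary_markdown,
})

for message in (fetch_request, save_request):
    print(json.dumps(message))
```

The model decides on its own when to issue each call, which is the practical difference from the RAG setup in the first part.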

You can see the results below:

This is how the summarized article, saved locally as “nat-traversals.md”, looks from the Obsidian perspective:

Summary

Integrating Obsidian with AI tools such as an MCP server, Codex CLI, and AIChat creates a powerful, flexible environment for managing and enhancing your local documentation. By combining MCP’s real-time data access and tool execution, Codex’s command-line interface, and AIChat’s built-in vector search with retrieval-augmented generation (RAG), you can browse, update, and enrich your notes with precise, context-aware responses directly from the CLI. This setup can also be incorporated into automation workflows, such as CI/CD pipelines, to keep documentation continuously updated throughout the development process.