Self-hosted ChatGPT accessible from anywhere you want

In this post I’ll show you how I built a self-hosted version of ChatGPT that is more user-friendly and also way cheaper! The entire solution is built from the following components:

- Cloudflare tunnel (in my case I’m using cloudflared Docker image)

- Mattermost (open-source alternative to Slack)

- Python bot (the application uses the OpenAI library as well as a Mattermost bot client)

- Own domain (it can be a cheap one, but it is required in order to use the tunnel and expose it securely to the internet)

Let’s briefly describe each component for those who are unfamiliar with what it is supposed to do:

Mattermost

Mattermost is a self-hosted, open-source messaging platform that can be used as an alternative to Slack. It offers similar features such as group messaging, file sharing, and integrations with other tools. Because it is self-hosted, it gives users more control over their data and privacy. Mattermost is also highly customizable and can be tailored to specific team needs. In my case the most important feature is the ability to implement a custom bot, which we are going to do using Python and the mmpy_bot package. Creating a bot account is super easy once you have the application up and running, just follow the official docs: https://docs.mattermost.com/integrations/cloud-bot-accounts.html

Cloudflare Tunnel

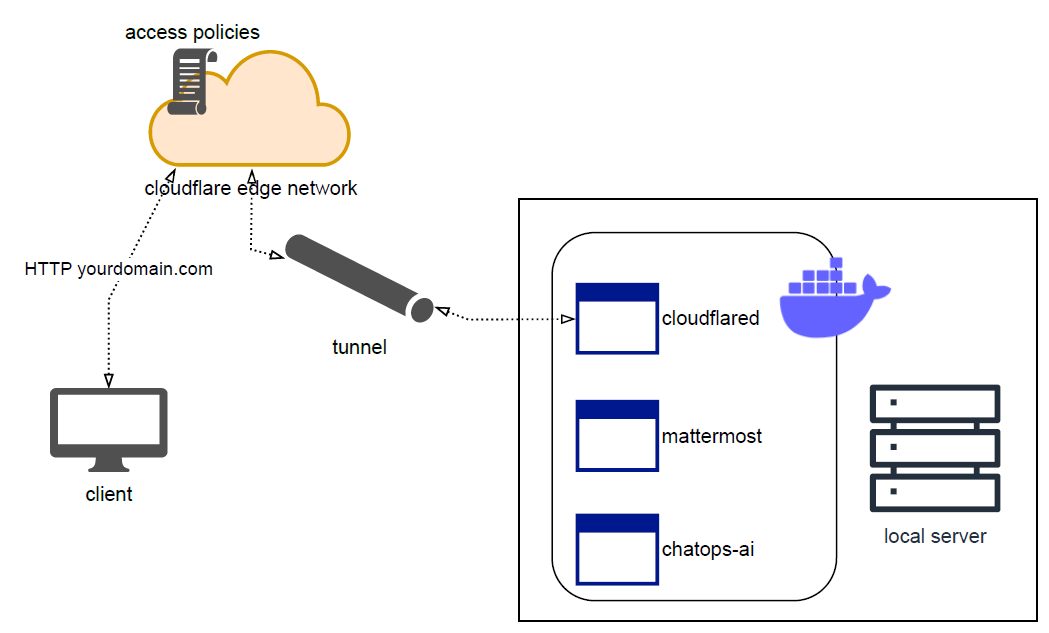

Cloudflare Tunnel (cloudflared) is a service offered by Cloudflare that lets you expose a locally hosted web server to the internet through a secure tunnel. It connects your self-hosted web server to Cloudflare’s global network, making it accessible from anywhere in the world. Once Cloudflare Tunnel is running on your server (it can be installed as a systemd service or run as a container), traffic to your domain name is routed through the secure tunnel to your local web server. This allows you to host your own website or web application without exposing your local network to the internet directly. I’m running the tunnel as a container and have also created a separate Docker network shared by cloudflared and the dependent services: the Mattermost app and the Python bot (chatops-ai).

Cloudflare can also secure your self-hosted application using the following methods:

- Enable HTTPS: by default, Cloudflare Tunnel uses HTTPS to secure the connection between the client and Cloudflare, and the tunnel to your server is encrypted as well (even if your self-hosted app only speaks HTTP)

- Access control rules: you can restrict access to your application by specifying which IP addresses or CIDR ranges are allowed and which ones are blocked

- Identity-based access: you can require users to authenticate with their email address, Google, or another identity provider before they can reach your application

- Rate limiting: you can limit the number of requests made to your application within a certain time period, which helps mitigate DDoS attacks and protects your application from abuse

High-level diagram of how Cloudflare Tunnel works:

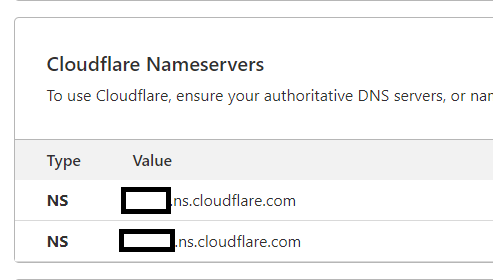

There is very good documentation about Cloudflare Tunnel and other “Zero Trust” services: https://developers.cloudflare.com/cloudflare-one/connections/connect-apps

In order to use Cloudflare Tunnel, your domain must point to Cloudflare’s nameservers. Cloudflare provides those nameservers to you during setup.

Docker Compose file with 3 services:

- chatops-ai (python bot)

- cloudflared (tunnel)

- mattermost

    version: "3.7"

    services:
      # Mattermost server (preview image bundles its own database)
      mattermost:
        container_name: mattermost
        image: mattermost/mattermost-preview:${MATTERMOST_VER}
        restart: unless-stopped
        environment:
          - TZ=Europe/Warsaw
          - MM_SERVICESETTINGS_SITEURL=http://localhost:8065
        ports:
          - "8065:8065"
        volumes:
          - ./mattermost/data:/mm/mattermost-data
          - ./mattermost/sql:/var/lib/mysql
          - ./mattermost/config.json:/mm/mattermost/config/config.json
          - ./mattermost/logs:/mm/mattermost/logs
        networks:
          - chatops

      # Python bot that forwards questions to the OpenAI API
      chatops-ai:
        container_name: chatops-ai
        image: chatops-ai:${ALPINE_PY_VER}
        restart: unless-stopped
        build:
          context: ./chatops_bot/
          dockerfile: Dockerfile
          args:
            - ALPINE_PY_VER=${ALPINE_PY_VER}
        volumes:
          - ./chatops_bot:/app
        depends_on:
          - mattermost
        networks:
          - chatops

      # Cloudflare Tunnel that exposes Mattermost to the internet
      cloudflared:
        image: cloudflare/cloudflared:${CLOUDFLARED_VER}
        container_name: cloudflare_tunnel
        restart: unless-stopped
        command: tunnel run
        environment:
          - TUNNEL_TOKEN=${CLOUDFLARED_TOKEN}
        depends_on:
          - chatops-ai
        networks:
          - chatops

    # Shared network so the three containers can reach each other by name
    networks:
      chatops:
        name: chatops
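The Compose file relies on several `${...}` variables (`MATTERMOST_VER`, `ALPINE_PY_VER`, `CLOUDFLARED_VER`, `CLOUDFLARED_TOKEN`), which Docker Compose can read from a `.env` file next to it. As a convenience, here is a minimal sketch of a pre-flight check that lists variables referenced in the Compose file but not defined in `.env`. The helper names (`referenced_vars`, `defined_vars`, `missing_vars`) are my own and hypothetical, and the parsing is deliberately naive (no quoting or `${VAR:-default}` handling).

```python
import re

# Matches simple ${VAR} references only; default-value syntax is not handled
COMPOSE_VAR_RE = re.compile(r'\$\{([A-Z0-9_]+)\}')


def referenced_vars(compose_text: str) -> list[str]:
    """Return the sorted, de-duplicated variable names used in the Compose file."""
    return sorted(set(COMPOSE_VAR_RE.findall(compose_text)))


def defined_vars(env_text: str) -> set[str]:
    """Return the variable names defined in a .env file (comments and blanks skipped)."""
    names = set()
    for line in env_text.splitlines():
        line = line.strip()
        if line and not line.startswith('#') and '=' in line:
            names.add(line.split('=', 1)[0].strip())
    return names


def missing_vars(compose_text: str, env_text: str) -> list[str]:
    """Return variables that the Compose file needs but .env does not define."""
    defined = defined_vars(env_text)
    return [name for name in referenced_vars(compose_text) if name not in defined]
```

In practice you would pass in the contents of `docker-compose.yml` and `.env`, and refuse to start the stack while the returned list is non-empty.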

Python bot

My bot is basically a Python app that runs within the same Docker network as Mattermost and cloudflared, and communicates with Mattermost over WebSockets. The main responsibility of the bot is to forward questions to the OpenAI API and send the responses back to the message thread (in other words, to the sender). Two Python packages do the heavy lifting (the app also imports redis and python-dotenv):

- openai: https://pypi.org/project/openai

- mmpy_bot: https://pypi.org/project/mmpy-bot

Storing chat context

Chat context is basically the chat history that is passed to the OpenAI API in subsequent chat calls. To maintain it we need some sort of storage. In my case I’m using a Redis database that is hosted somewhere else, but it can be any kind of storage, e.g. a cloud blob, an SQL/NoSQL database, file storage, etc. I’ve configured the Python app to write the chat context as a JSON payload.
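To make the storage step concrete, here is a minimal sketch of the load/append/save round trip for one thread. It uses the same `chatgpt:thread:<id>` key layout as the bot, but `DictStore`, `load_context` and `save_context` are hypothetical names of mine, and the in-memory store only mimics the `get`/`set` subset of a Redis client so the example runs without a server.

```python
import json

THREAD_KEY = 'chatgpt:thread:{}'  # same key layout as the bot code in this post


class DictStore:
    """Hypothetical in-memory stand-in for the get/set subset of a Redis client."""

    def __init__(self):
        self._data = {}

    def get(self, key):
        return self._data.get(key)

    def set(self, key, value):
        # Redis returns bytes on read, so store bytes to keep the round trip realistic
        self._data[key] = value.encode('utf-8') if isinstance(value, str) else value


def load_context(store, thread_id):
    """Return the stored message list for a thread, or None if nothing is saved yet."""
    raw = store.get(THREAD_KEY.format(thread_id))
    return json.loads(raw) if raw else None


def save_context(store, thread_id, chat_log):
    """Serialize the whole message list as one JSON payload under the thread key."""
    store.set(THREAD_KEY.format(thread_id), json.dumps(chat_log, ensure_ascii=False))


store = DictStore()
# First message in a thread: no context yet, so start with a system message
log = load_context(store, 'abc123') or [{'role': 'system', 'content': 'Hello ChatGPT.'}]
log.append({'role': 'user', 'content': 'What is a Cloudflare Tunnel?'})
save_context(store, 'abc123', log)
restored = load_context(store, 'abc123')
```

Because the whole history is stored as a single value per thread, swapping Redis for a file or a blob only means replacing the two `get`/`set` calls.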

Python Dockerfile

    ARG ALPINE_PY_VER
    FROM python:${ALPINE_PY_VER}

    WORKDIR /app
    COPY requirements.txt .

    # Set the container timezone, then install Python dependencies
    RUN \
      apk add tzdata && \
      cp /usr/share/zoneinfo/Europe/Warsaw /etc/localtime && \
      echo "Europe/Warsaw" > /etc/timezone && \
      apk del tzdata && \
      pip install -r requirements.txt

    # chatops_bot.py is provided at runtime by the ./chatops_bot:/app volume
    ENTRYPOINT [ "python", "chatops_bot.py" ]

Which AI model to use is also quite important. In my opinion gpt-3.5-turbo is the most attractive at the moment because it’s much cheaper than GPT-4 and also faster. However, GPT-4 is more powerful, which means it can give more comprehensive answers and solve more complex problems, so you should test for yourself which one better fits your needs.

Python app (chatops-ai):

    import json
    import logging
    import os
    import re
    import sys
    from dataclasses import dataclass
    from pathlib import Path
    from time import sleep
    from typing import Optional

    from dotenv import load_dotenv
    from mmpy_bot import Bot, Message, Plugin, Settings, listen_to
    from openai import AsyncOpenAI
    from redis import Redis

    cwd = Path(__file__).parent

    # Abort early if the .env file with credentials is missing
    if not load_dotenv(dotenv_path=str(cwd) + '/.env'):
        sys.exit(120)

    r = Redis(os.environ['REDIS_HOST'])

    logging.basicConfig(level=logging.INFO, format='%(asctime)s :: %(levelname)s :: %(message)s')
    logger = logging.getLogger('chatops-ai')


    @dataclass
    class OpenAiModel():
        gpt4o: str = 'gpt-4o-2024-05-13'            # 128,000 tokens, training data up to Oct 2023
        gpt4turbo: str = 'gpt-4-turbo-2024-04-09'   # 128,000 tokens, training data up to Dec 2023


    class ChatGPT():
        def __init__(self, model: str, temperature: float = 0.5):
            self.model = model
            self.temperature = temperature

        async def askgpt(self, question: str, chat_log: Optional[list[dict[str, str]]] = None):
            client = AsyncOpenAI(api_key=os.environ['OPEN_AI_KEY'], max_retries=3)
            # Start a new conversation when there is no stored context
            if not chat_log:
                chat_log = [{
                    'role': 'system',
                    'content': 'Hello ChatGPT.',
                }]
            chat_log.append({'role': 'user', 'content': question})
            completion = await client.chat.completions.create(model=self.model, messages=chat_log, temperature=self.temperature)
            answer = completion.choices[0].message.content
            chat_log.append({'role': 'assistant', 'content': answer})
            return answer, chat_log


    class MyPlugin(Plugin):
        chat = ChatGPT(model=OpenAiModel.gpt4o)
        # Bot commands; anything else is treated as a question for the model
        pattern = r'^--help|^--use-gpt4o|^--use-gpt4-turbo|^--clean-db|^--set-temp=[0-9]+\.[0-9]+'
        regexp = re.compile(pattern)

        @listen_to(".*", direct_only=True, allowed_users=["krzysiek"])
        async def bot_respond(self, message: Message):
            if m := self.regexp.match(message.text):
                match m.group():
                    case '--help':
                        msg = f'to use specific GPT model use following parameters:\n```--use-gpt4o```\n```--use-gpt4-turbo```\ncurrent model: {self.chat.model}, '
                        msg += f'temperature: {self.chat.temperature}\n'
                        msg += f'to change model temperature (from 0.0 to 2.0) use:\n```--set-temp=0.2```\n'
                        msg += f'to remove old context entries from database use:\n```--clean-db```\n\n'
                        self.driver.reply_to(message, msg)
                    case '--use-gpt4o':
                        self.chat = ChatGPT(model=OpenAiModel.gpt4o)
                        self.driver.reply_to(message, f'you are now using: {self.chat.model}')
                    case '--use-gpt4-turbo':
                        self.chat = ChatGPT(model=OpenAiModel.gpt4turbo)
                        self.driver.reply_to(message, f'you are now using: {self.chat.model}')
                    case '--clean-db':
                        keys_removed = init_db_cleanup()
                        if keys_removed:
                            r.delete(*keys_removed)
                        self.driver.reply_to(message, f'number of keys cleaned: {len(keys_removed)}')
                    case x if x.startswith('--set-temp='):
                        self.chat.temperature = float(x.split('=')[-1])
                        self.driver.reply_to(message,
                            f'temperature set to: {self.chat.temperature}, current model: {self.chat.model}'
                        )
            else:
                # Load the stored context for this thread (if any) and ask the model
                conv_thread = r.get(f'chatgpt:thread:{message.reply_id}')
                conv_log = json.loads(conv_thread) if conv_thread else None
                try:
                    answer, conv_log = await self.chat.askgpt(question=message.text, chat_log=conv_log)
                except Exception as e:
                    logger.error(e)
                    self.driver.reply_to(message, "can you please repeat your question, I did not get it")
                else:
                    log_line = dict(conv_log[-1])
                    log_line['question'] = message.text
                    logger.info(json.dumps(log_line, ensure_ascii=False))
                    # Persist the updated context so the thread can be continued later
                    r.set(f'chatgpt:thread:{message.reply_id}', json.dumps(conv_log, ensure_ascii=False))
                    self.driver.reply_to(message, answer)


    def init_db_cleanup() -> list[str]:
        # Imported here because db_utils reads environment variables at import time,
        # which must happen after load_dotenv() has run
        import db_utils
        thread_keys = [i.decode('utf-8') for i in r.keys('chatgpt:thread:*')]
        to_remove = db_utils.get_old_keys(thread_keys)
        for i in to_remove:
            logger.info(f'removing: {i}')
        return to_remove


    def init_bot():
        bot = Bot(
            settings=Settings(
                MATTERMOST_URL=os.environ['MATTERMOST_URL'],
                MATTERMOST_PORT=os.environ['MATTERMOST_PORT'],
                BOT_TOKEN=os.environ['BOT_TOKEN'],
                BOT_TEAM=os.environ['BOT_TEAM'],
                MATTERMOST_API_PATH='',
                SSL_VERIFY=False
            ),
            plugins=[MyPlugin()]
        )
        bot.run()


    def connect(timeout: float, retry: int):
        # Mattermost may still be starting, so retry with a growing delay
        cnt = 0
        while True:
            try:
                init_bot()
            except Exception as e:
                if cnt > retry:
                    raise TimeoutError('too many retries exceeded') from e
                sleep(timeout)
                timeout *= 1.5
                cnt += 1
                logger.warning(f'retrying ({cnt}) connection')


    if __name__ == '__main__':
        logger.info('connecting to mattermost api...')
        connect(timeout=1.5, retry=15)
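The command handling in the plugin can be exercised on its own, without Mattermost. The following is a minimal sketch, not the bot's actual code: `parse_command` is a hypothetical helper, the pattern is anchored at both ends (the plugin only anchors the start), the dot in the temperature is escaped, and the 0.0 to 2.0 range check is an addition based on the range mentioned in the `--help` text.

```python
import re

# One alternation, anchored at both ends; group 2 captures the temperature value
COMMAND_RE = re.compile(
    r'^--(help|use-gpt4o|use-gpt4-turbo|clean-db|set-temp=([0-9]+\.[0-9]+))$'
)


def parse_command(text: str):
    """Return (command, temperature) for a bot command, or None for a normal question."""
    m = COMMAND_RE.match(text.strip())
    if not m:
        return None
    if m.group(2) is not None:
        temperature = float(m.group(2))
        # Range taken from the bot's help text (0.0 to 2.0)
        if not 0.0 <= temperature <= 2.0:
            raise ValueError(f'temperature out of range: {temperature}')
        return 'set-temp', temperature
    return m.group(1), None
```

Returning `None` for anything that is not a command keeps the caller simple: a `None` result means the text should be forwarded to the model as a question.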

db_utils.py:

    from urllib.request import Request, urlopen
    import os, json

    username = os.environ['MM_USER']
    passwd = os.environ['MM_PASS']
    api_url = os.environ['MATTERMOST_URL']
    api_port = os.environ['MATTERMOST_PORT']
    mm_user_id = os.environ['MM_USER_ID']
    mm_team_id = os.environ['MM_TEAM_ID']


    def get_old_keys(redis_threads: list[str]) -> list[str]:
        # Log in to the Mattermost API to obtain a session token
        token_url = f'{api_url}:{api_port}/api/v4/users/login'
        body = json.dumps({
            "login_id": username,
            "password": passwd
        }).encode('utf-8')
        req = urlopen(Request(url=token_url, data=body, headers={'Content-Type': 'application/json'}))
        token = req.headers['token']

        # Fetch the threads that still exist for this user and team
        threads_url = f'{api_url}:{api_port}/api/v4/users/{mm_user_id}/teams/{mm_team_id}/threads'
        r = urlopen(Request(threads_url, headers={'Authorization': f'Bearer {token}'}))
        content = json.loads(r.read())
        thread_ids = [f"chatgpt:thread:{i['id']}" for i in content['threads']]

        # Redis keys without a matching Mattermost thread are considered old
        return [k for k in redis_threads if k not in thread_ids]
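The last step of `get_old_keys` is a plain set difference, which can be tried in isolation. A minimal sketch, assuming a hypothetical helper `stale_keys` that takes the Redis keys and the thread ids Mattermost still reports; it uses a set so each lookup stays cheap even with many threads.

```python
def stale_keys(redis_keys: list[str], live_thread_ids: list[str],
               prefix: str = 'chatgpt:thread:') -> list[str]:
    """Return the Redis keys whose thread no longer exists in Mattermost."""
    # Build the keys that should be kept, using the same key layout as the bot
    live = {f'{prefix}{thread_id}' for thread_id in live_thread_ids}
    # Preserve the input order so the cleanup log stays readable
    return [key for key in redis_keys if key not in live]


keys = ['chatgpt:thread:a1', 'chatgpt:thread:b2', 'chatgpt:thread:c3']
to_delete = stale_keys(keys, live_thread_ids=['b2'])
```

Here `to_delete` holds the keys for `a1` and `c3`, since only thread `b2` is still alive.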

ChatGPT subscription vs OpenAI API

From my experience, this approach (using the API and the gpt-3.5-turbo model) is way cheaper than the paid subscription to chat.openai.com (20 USD/month). Of course it depends on how much you are going to use the API and how much text you are going to send as context. API pricing is based not only on the number of requests but also on the amount of data (tokens) processed, which may vary. In my case I calculated that my cost would be around 1-5 USD/month, which is quite cheap. To understand pricing better, I highly recommend reading the OpenAI pricing docs: https://openai.com/pricing#language-models
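Since the bill depends on tokens rather than on a flat fee, a rough estimate is easy to script. This is a minimal sketch with a hypothetical helper `monthly_cost_usd`; the per-million-token prices and the usage numbers in the example are illustrative placeholders, not quoted prices, so plug in the current values from the pricing page.

```python
def monthly_cost_usd(requests_per_day: float, input_tokens: int, output_tokens: int,
                     usd_per_1m_input: float, usd_per_1m_output: float,
                     days: int = 30) -> float:
    """Estimate a monthly API bill from average tokens per request."""
    # Input tokens include the whole chat context that is resent with each question
    per_request = (input_tokens * usd_per_1m_input
                   + output_tokens * usd_per_1m_output) / 1_000_000
    return requests_per_day * days * per_request


# Placeholder numbers: 20 questions a day, ~1500 context tokens in, ~500 tokens out
estimate = monthly_cost_usd(requests_per_day=20, input_tokens=1500, output_tokens=500,
                            usd_per_1m_input=0.50, usd_per_1m_output=1.50)
print(f'{estimate:.2f} USD/month')  # prints "0.90 USD/month"
```

Note that the input side grows with every message in a thread, because the full history is resent each time, so long conversations cost more per question than short ones.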

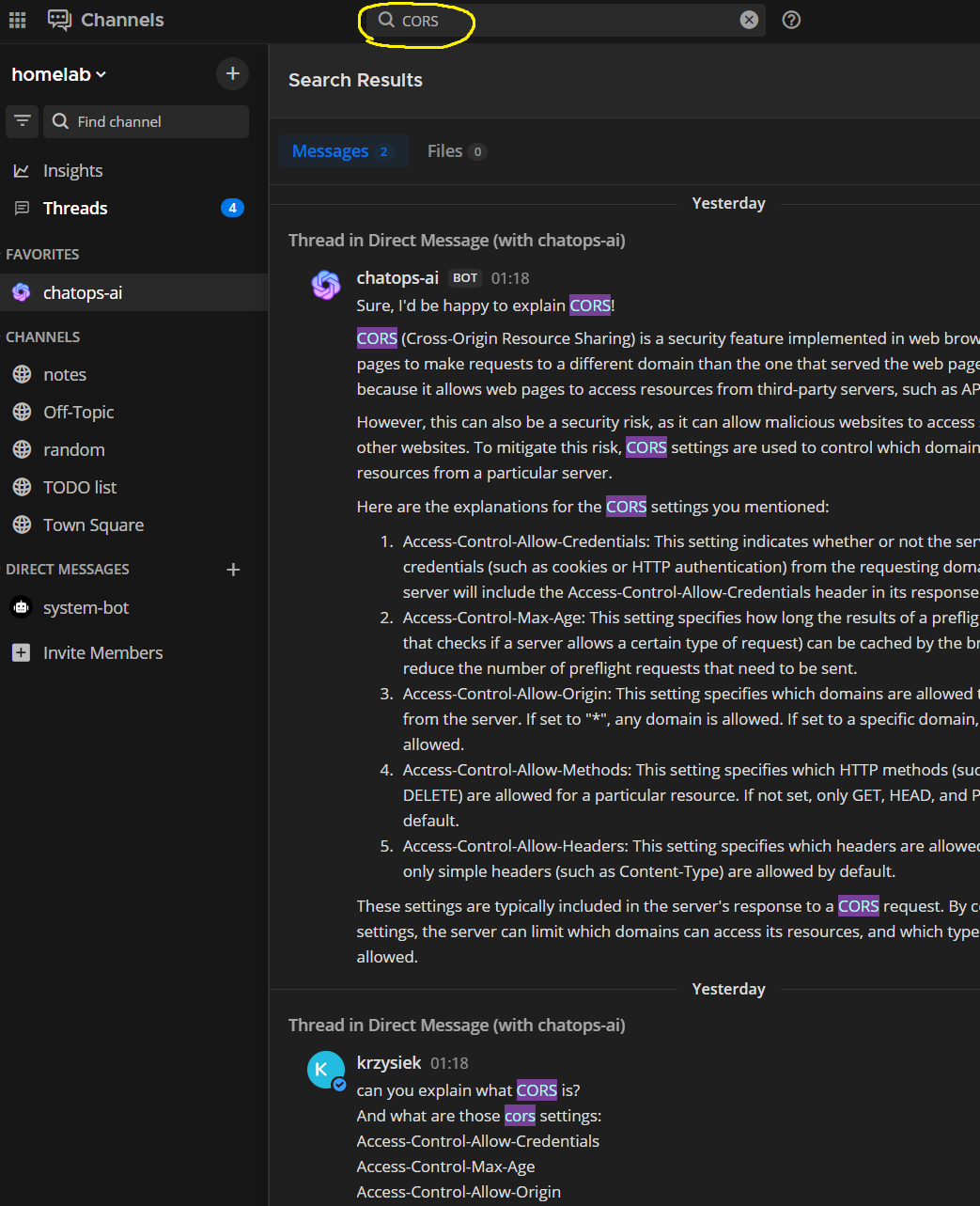

Better user experience

One quite important feature is currently missing from the original ChatGPT (https://chat.openai.com): full-text search. Yes, you are not able to search your past conversations for a specific word. For Mattermost this is not a problem, as it can find whole words or parts of them. For me personally, the lack of search is a dealbreaker. Of course there are other Mattermost features that can be utilized, such as incoming/outgoing webhooks, slash commands, and push notifications, which give you an incredibly large number of possibilities to expand your application.